Building an AI Reasoning Pane in a Node.js + C# Semantic Kernel Stack

Add a Chainlit-Style UI with .NET and React for Real-Time AI Reasoning

Executive Summary

Recently we had to make architecture decisions about the UX for our enterprise agents. This article explores how to surface real-time AI reasoning in a custom chat interface built on a Node.js/React front end and a C# Web API backend that uses Microsoft's Semantic Kernel. Drawing inspiration from Chainlit, an open-source Python framework for building conversational AI applications, it proposes architectural patterns and practical implementations for delivering a transparent, agent-based user experience with enterprise-friendly technologies such as .NET and C#.

Introduction

Chainlit offers a powerful development experience for LLM applications, combining orchestration logic and an interactive UI in Python. However, many enterprise teams use heterogeneous stacks, such as React for the front end and C#/.NET for backend services. This article addresses how to replicate Chainlit-style reasoning and agent visibility in such an environment.

The Problem

Most chat-based AI systems behave as black boxes, making decisions without surfacing the rationale. Chainlit solves this with a real-time UI pane showing reasoning steps (e.g., function calls, tool usage, planner decisions). Many enterprise copilots and agents are written in C#, and replicating this functionality in a Node.js and C# Semantic Kernel environment presents architectural and implementation challenges.

A hybrid approach is also possible: you can run Chainlit in the same container or VM as your enterprise agents and embed its reasoning pane in your app. For maximum flexibility in enterprise interoperability, however, you may prefer to control the UX natively within your React app.

Architecture Overview

Frontend: React-based chat interface

Backend: ASP.NET Core Web API using Semantic Kernel (C#)

Real-Time Channel: SignalR for live updates of agent reasoning

Building the Reasoning Pane

4.1 Capturing Reasoning Steps

Semantic Kernel supports step-level orchestration via planners and semantic/skill functions (skills are called plugins in current releases). Developers can extend the execution code to emit structured ReasoningStep objects that capture the step type, description, inputs, and outputs. These steps can then be streamed live using ASP.NET Core SignalR, which ships with the framework.
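As an illustration, the React side might mirror the ReasoningStep payload with a type like the one below. This is a minimal sketch, not the shape Semantic Kernel or SignalR prescribes: the field names, the three step-type values, and the isReasoningStep helper are all assumptions derived from the attributes listed above (step type, description, inputs, outputs).

```typescript
// Hypothetical client-side mirror of the ReasoningStep object emitted by the
// C# backend. Field names and step-type values are assumptions for this sketch.
type StepType = "function_call" | "tool_usage" | "planner_decision";

interface ReasoningStep {
  type: StepType;
  description: string;
  inputs: Record<string, unknown>;
  outputs: Record<string, unknown>;
}

const STEP_TYPES: readonly StepType[] = [
  "function_call",
  "tool_usage",
  "planner_decision",
];

// True for plain objects (not null, not arrays).
function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Type guard for payloads arriving over the wire, so a malformed message
// is rejected instead of being rendered in the reasoning pane.
function isReasoningStep(value: unknown): value is ReasoningStep {
  if (!isRecord(value)) return false;
  const type = value["type"];
  const description = value["description"];
  return (
    typeof type === "string" &&
    STEP_TYPES.indexOf(type as StepType) !== -1 &&
    typeof description === "string" &&
    isRecord(value["inputs"]) &&
    isRecord(value["outputs"])
  );
}
```

Validating at the boundary keeps the pane component simple: everything it receives is already known to have the four expected fields.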

4.2 Returning Trace in Response

If real-time updates aren’t required, the reasoning trace can be sent as part of the HTTP response. This provides a simplified, post-execution view similar to Chainlit’s transcript pane.
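A sketch of how the client could consume such a response follows. The ChatResponse shape (a reply plus a trace array), the formatTrace helper, and the sample values are hypothetical; the actual response contract depends on how the Web API is designed.

```typescript
// Hypothetical step shape; field names are assumptions for this sketch.
interface ReasoningStep {
  type: string;
  description: string;
  inputs: Record<string, unknown>;
  outputs: Record<string, unknown>;
}

// Hypothetical response body: the final answer plus the ordered trace.
interface ChatResponse {
  reply: string;
  trace: ReasoningStep[];
}

// Turn the trace into numbered lines for a simple post-execution view.
function formatTrace(response: ChatResponse): string[] {
  return response.trace.map(
    (step, index) => `${index + 1}. [${step.type}] ${step.description}`
  );
}

// Illustrative data only; the plugin and function names are made up.
const sample: ChatResponse = {
  reply: "It will be sunny tomorrow.",
  trace: [
    {
      type: "planner_decision",
      description: "Chose the weather plugin",
      inputs: { question: "What is the weather tomorrow?" },
      outputs: { plugin: "Weather" },
    },
    {
      type: "function_call",
      description: "Called GetForecast",
      inputs: { day: "tomorrow" },
      outputs: { forecast: "sunny" },
    },
  ],
};

const lines = formatTrace(sample);
// lines[0] is "1. [planner_decision] Chose the weather plugin"
// lines[1] is "2. [function_call] Called GetForecast"
```

Because the trace arrives with the reply, this variant needs no persistent connection, at the cost of showing the steps only after the agent has finished.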

4.3 Real-Time Updates with SignalR

SignalR enables server-pushed updates. Developers can:

Create a SignalR Hub (e.g., TraceHub)

Inject IHubContext<TraceHub> into the Semantic Kernel execution logic

Emit ReasoningStep updates to the client as decisions occur

4.4 React Front-End Integration

The React app connects to the SignalR hub and listens for ReceiveStep events. Each step is appended live to a reasoning pane component using React state.
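The subscription logic can be sketched independently of React and of the SignalR package. In the sketch below, StepSource is a hypothetical interface modeled on the on/off methods of the HubConnection class in @microsoft/signalr, so a real connection could be passed in its place. The helpers subscribeToSteps and appendStep, and the ReasoningStep field names, are assumptions for illustration.

```typescript
// Hypothetical step shape; field names are assumptions for this sketch.
interface ReasoningStep {
  type: string;
  description: string;
  inputs: Record<string, unknown>;
  outputs: Record<string, unknown>;
}

// Minimal subset of a hub connection used here (assumed to match the
// on/off methods of a SignalR HubConnection).
interface StepSource {
  on(eventName: string, handler: (...args: any[]) => void): void;
  off(eventName: string, handler: (...args: any[]) => void): void;
}

// Pure state update: returns a new array so React sees a changed reference.
function appendStep(
  steps: readonly ReasoningStep[],
  step: ReasoningStep
): ReasoningStep[] {
  return steps.concat([step]);
}

// Register a ReceiveStep listener and return a function that removes it.
function subscribeToSteps(
  source: StepSource,
  onStep: (step: ReasoningStep) => void
): () => void {
  const handler = (step: ReasoningStep): void => onStep(step);
  source.on("ReceiveStep", handler);
  return () => source.off("ReceiveStep", handler);
}
```

In a component, one way to use this is to call subscribeToSteps inside a useEffect, pass a callback that applies appendStep through the functional form of the state setter, and return the unsubscribe function as the effect's cleanup so the listener is removed when the pane unmounts.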

Streaming vs. SignalR

Semantic Kernel natively supports streaming token output via IAsyncEnumerable, but this doesn’t cover reasoning or planner-level visibility. SignalR provides a complementary channel for full transparency.

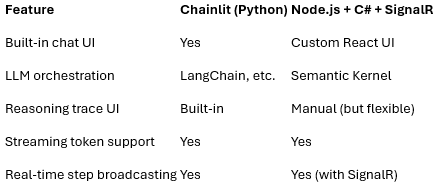

Comparison to Chainlit

Conclusion

Enterprises using Node.js and .NET can deliver Chainlit-style experiences by integrating Semantic Kernel and SignalR with a custom React front end. This architecture preserves transparency, enhances user trust, and empowers developers to build safe and explainable LLM-based applications. As noted earlier, another option is to host both Chainlit and your React app in the same VM and embed Chainlit into your UX. This option may have limitations, but it will be much faster to implement. We’ll share a video of our first implementation in a future post.